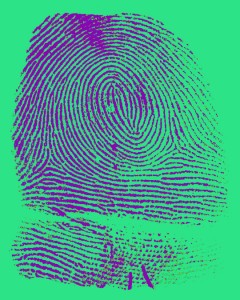

In the digital age, fingerprints are recorded using scanners rather than ink pads and paper. As with any new technology, the question of quality control arises. Variables ranging from a subject’s dry skin to a dirty sensor plate can degrade an image enough to produce an incorrect match with a fingerprint on file. The U.S. National Institute of Standards and Technology (NIST) developed a program that judges the quality of a scanned fingerprint and indicates whether a fingertip should be rescanned. NIST released the software to U.S. law enforcement agencies and biometrics researchers.

Films and TV shows often depict a technician declaring a fingerprint match based on computer analysis alone. In reality, a human examiner makes the final decision about a match by comparing the fingerprints. This guards against computer error but introduces the possibility of human error.

The National Institute of Justice (NIJ) and NIST assessed the effects of human factors on forensic latent print analysis and drafted recommendations to reduce the risk of error. A copy of the 2012 report, “Latent Print Examination and Human Factors: Improving the Practice through a Systems Approach,” is available from the NIJ website. The NIJ also offers a flow chart that shows the Analysis, Comparison, Evaluation, and Verification (ACE-V) process for latent print examination used in national forensic crime labs.

Kasey Wertheim and Melissa Taylor’s article, “Human Factors in Latent-print Examination,” is another good source of information on the causes of fingerprint analysis errors. The article was published in the January-February 2012 edition of Evidence Technology Magazine.